2023. 3. 30. 07:06ㆍData science

Interpretation of deep neural networks

Deep neural networks are hard to interpret because they stack multiple layers of nonlinear transformations. They may produce highly accurate predictions, but it is difficult to determine how the model arrived at a prediction and which features of the input data mattered most.

This post summarises a few methods that can be applied to interpreting deep neural networks.

The following is a summary of Chapter 10 (Neural Network) of the book "Interpretable Machine Learning".

[Introduction]

- Interpretation methods are used to visualize features and concepts learned by neural networks, explain individual predictions, and simplify neural networks

- Deep learning has been successful in image and text tasks, but the mapping from input to prediction is too complex for humans to understand without interpretation methods

- Neural networks need specific interpretation methods for two reasons: they learn features and concepts in their hidden layers, and their gradients can be used to build more computationally efficient methods

- The chapter covers the following techniques:

- Learned Features

- Pixel Attribution (Saliency Maps)

- Concepts

- Adversarial Examples

- Influential Instances

[10.1 Learned Features]

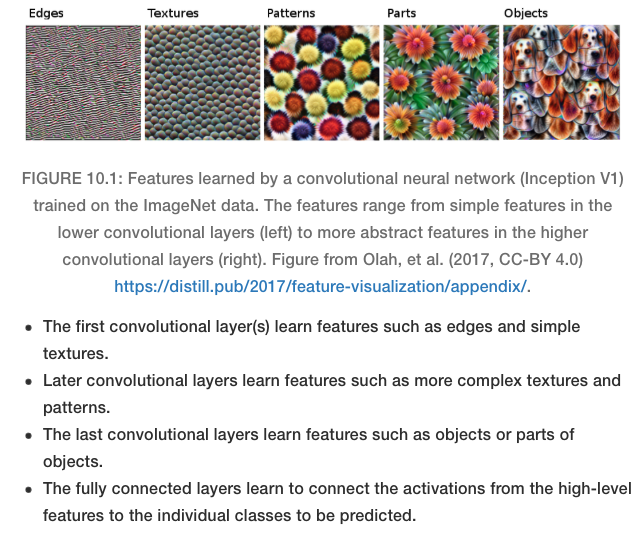

We can use activation maximisation to visualize the learned features in a convolutional neural network. This involves starting with a random input image and iteratively adjusting its pixel values to maximize the activation of a specific feature or neuron in the network. By doing this for multiple features or neurons, we can generate images highlighting the learned features in each network layer. These images can give us insights into what types of patterns and concepts the network is learning.
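The loop described above can be sketched on a toy model. The following is a minimal illustration, not the book's implementation: the "network" is a hypothetical single 1-D convolution filter followed by ReLU, the weights in `KERNEL` are made up, and the names `mean_activation`, `gradient`, and `maximise_activation` are assumptions introduced here. A real CNN would use a deep learning framework and automatic differentiation instead of the hand-written gradient.

```python
import random

# Hypothetical fixed "network": one 1-D convolution filter followed by ReLU.
# The weights are assumed for illustration and stay fixed during optimisation.
KERNEL = [-1.0, 2.0, -1.0]


def channel_activations(image):
    """Return ReLU(conv1d(image, KERNEL)) at every valid position."""
    k = len(KERNEL)
    return [
        max(0.0, sum(KERNEL[j] * image[i + j] for j in range(k)))
        for i in range(len(image) - k + 1)
    ]


def mean_activation(image):
    """Objective: mean activation of the channel."""
    acts = channel_activations(image)
    return sum(acts) / len(acts)


def gradient(image):
    """Gradient of mean_activation with respect to each pixel."""
    k = len(KERNEL)
    n_pos = len(image) - k + 1
    grad = [0.0] * len(image)
    for i in range(n_pos):
        pre = sum(KERNEL[j] * image[i + j] for j in range(k))
        if pre > 0:  # ReLU passes gradient only where it is active
            for j in range(k):
                grad[i + j] += KERNEL[j] / n_pos
    return grad


def maximise_activation(n_pixels=16, steps=200, lr=0.5, seed=0):
    """Start from random noise and adjust pixels by gradient ascent."""
    rng = random.Random(seed)
    image = [rng.random() for _ in range(n_pixels)]
    start = mean_activation(image)
    for _ in range(steps):
        g = gradient(image)
        # Ascent step, then clip so pixel values stay in [0, 1].
        image = [min(1.0, max(0.0, p + lr * gi)) for p, gi in zip(image, g)]
    return image, start, mean_activation(image)
```

Calling `image, start, final = maximise_activation()` returns the optimised input together with the objective value before and after optimisation, so the increase in activation can be inspected directly.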

[10.1.1. Feature Visualization]

Feature visualization makes the features learned by a neural network explicit. It is done by finding the input that maximizes the activation of a unit. The unit can be a single neuron, a channel, an entire layer, or the final class probability in classification (or the corresponding pre-softmax neuron).

TBD...

Mathematically, feature visualization is an optimization problem: with the neural network weights held fixed, the goal is to find a new image that maximizes the (mean) activation of a unit. The optimization can start from random noise and generate new images step by step. Constraints and regularization help produce meaningful images, for example allowing only small changes per update, applying jittering, rotation, or scaling before each optimization step, penalizing high frequencies, or generating images with a learned prior.
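Written out, the optimization problem might look as follows. The notation is an assumption introduced here for illustration: $h$ is the activation of a neuron, $img$ is the input image, $n$ indexes the layer, $x$ and $y$ the spatial position, and $z$ the channel. For a single neuron:

$$
img^{*} = \arg\max_{img} \; h_{n,x,y,z}(img)
$$

For an entire channel $z$ in layer $n$, the activations are aggregated over all spatial positions (a sum here; dividing by the number of positions gives the mean and does not change the maximizer):

$$
img^{*} = \arg\max_{img} \; \sum_{x,y} h_{n,x,y,z}(img)
$$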

Different units can be used for feature visualization, including individual neurons, channels, entire layers, or the final class probability in classification. Individual neurons are the atomic units of the network, but visualizing every neuron's feature would be too time-consuming because a network can contain millions of neurons. Channels are a good choice of unit for feature visualization. Going one step further, we can visualize an entire convolutional layer. Layers as a unit are used in Google's DeepDream, which repeatedly adds the visualized features of a layer to the original image, resulting in a dream-like version of the input.
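The choice of unit only changes which activations enter the objective. The sketch below makes that concrete under simplifying assumptions: the activations of one layer are given as a plain nested list indexed as `acts[channel][position]`, the function names are hypothetical, and the layer objective is taken to be the mean over all activations, which is one common choice and not necessarily what a particular DeepDream implementation uses.

```python
def neuron_objective(acts, channel, position):
    """Activation of a single neuron."""
    return acts[channel][position]


def channel_objective(acts, channel):
    """Mean activation over all positions of one channel."""
    values = acts[channel]
    return sum(values) / len(values)


def layer_objective(acts):
    """Mean activation over every channel and position in the layer."""
    values = [v for channel in acts for v in channel]
    return sum(values) / len(values)


# Toy activations for a layer with 2 channels and 3 positions (made-up values).
example_acts = [[0.0, 2.0, 4.0], [1.0, 1.0, 1.0]]
```

With `example_acts`, the neuron objective for channel 0 at position 2 reads one value, the channel objective averages the three values of a channel, and the layer objective averages all six.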

TBD...
